Our personal data powers a trillion-dollar industry, yet we have no control over it. So how, in an age where the use of data is both ubiquitous and mistrusted, can the digital industry gain the trust of consumers?

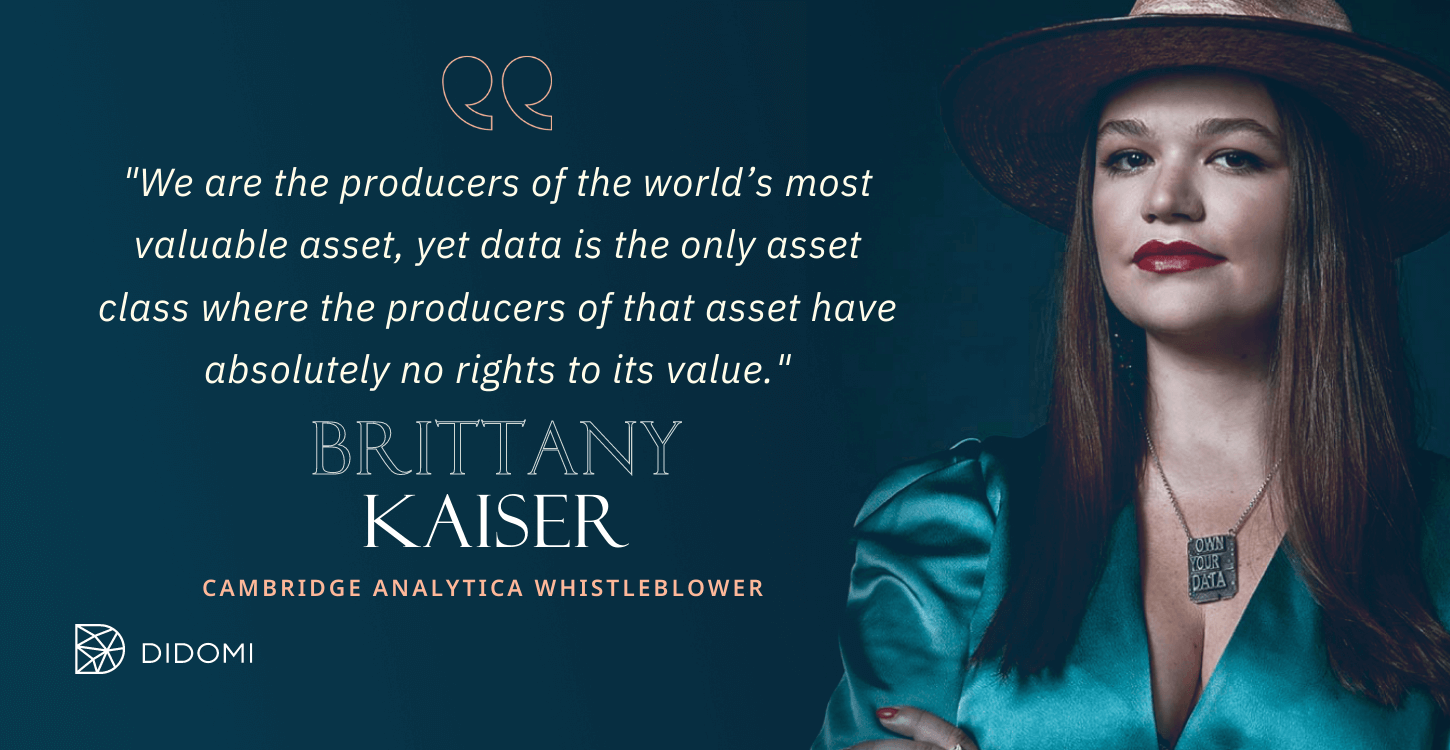

These are some of the issues addressed by globally renowned data protection expert and Cambridge Analytica whistle-blower Brittany Kaiser in her keynote speech at the recent Yes We Trust Summit, a worldwide, 100% digital privacy event initiated by Didomi to help people understand digital trust and inspire it in the internet age.


Why does digital trust matter?

Data has become the world’s most valuable asset. Our personal data (the information generated by every move we make, the places we go, the people we speak to, our interests, and what we like to interact with) is part of a multi-trillion-dollar industry that fuels decision-making across private and public organisations.

But it’s the only asset whose producers have absolutely no rights to its value. Our personal data is bought and sold around the world without our explicit consent, to be used for purposes we don’t understand and certainly didn’t agree to.


Over the past couple of decades, technology companies have concentrated on ways of extracting as much information about us as possible. Trust and transparency were never part of the equation. In fact, most legacy technologies were designed to ensure we didn’t understand just how much personal information we were giving away.

Some of these companies, such as Amazon, Google, Facebook, and Uber, are now worth hundreds of billions of dollars, a value that comes from an asset class that we, as their users, have produced. As the saying goes, if you’re not paying for the product, you are the product.

This model is unsustainable, however. It doesn’t contribute in any way to a trusting relationship, and businesses of the future won’t be able to continue down this path without the trust of their users.

So, what can companies and governments do to gain that trust?

What are the components of digital trust?

We’re entering a world where technological developments like IoT, AI, and smart cities mean we’ll produce more data than ever before, and powerful predictive analytics will be behind most of the decision-making that happens in our lives.

It’s essential, then, that we figure out what our data rights are. We need to dedicate ourselves to talking not just about privacy, but also data protection, permission, and, of course, digital trust.

Digital trust is made up of many different components – transparency, consent, accountability, ownership and, more recently, sustainability.

Transparency – To trust a company or government, you need to know exactly what it’s going to collect about you if you engage with its platform. Cambridge Analytica, for example, famously collected the personal data of millions of Facebook users without their consent in order to manipulate the outcome of the 2016 US presidential election and the Brexit referendum campaign.

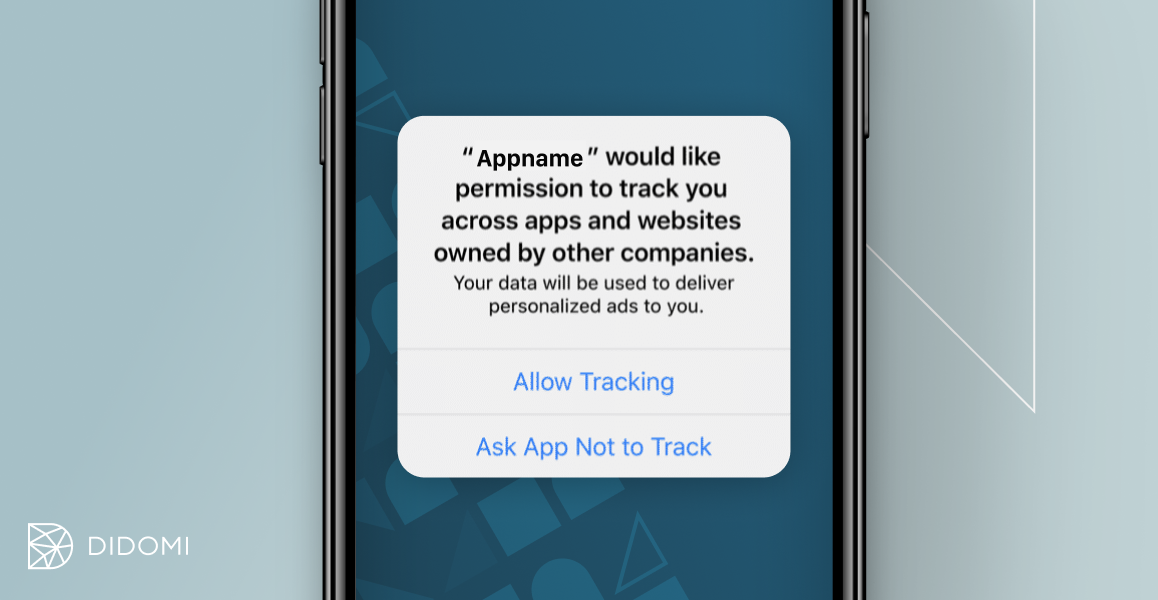

Most companies aren’t transparent about what’s being collected. If you read the T&Cs of the mobile apps you use every day, you’ll probably find you’re agreeing to give companies or developers access to your photos, videos, and other data, your live location, and perhaps the right to turn on your phone’s camera or microphone – even if you’re not using the app.

Rather than pages of legalese deliberately designed to obfuscate, companies should tell us what data they’re going to collect: clearly, in plain English, and in bullet points.

Consent – It’s one thing to have transparency, but we also need to be able to actively agree to what’s been outlined. As well as knowing what data points are being collected, we need to know where that data is going, who it will be shared with, and what they’re going to use it for. Only by knowing this can we give truly informed consent.

Accountability – For a business to be accountable for collecting and using people’s private information, it needs to ensure there is traceability around where that data goes. New technologies, like blockchain, can help make accountability part of the digital trust equation by allowing us to track and trace every company our data has been shared with.

Ownership – First and foremost, data should be owned by the individual. Then, when that individual interacts with a platform that provides value by helping them monetize or otherwise create value from their data, the platform itself should own a percentage of that data, perhaps even the larger proportion.

But every time the platform sells that data on to advertisers, the individual should receive a percentage of the revenue. That’s what ownership means: taking part in this multi-billion-dollar value creation, and actually owning the value we’re producing.

Sustainability – One of the biggest conversations currently happening in the technology community concerns the sustainability of large-scale data centres. When so many of the technologies we use in our digital lives consume huge amounts of energy, how can we ensure the technology revolution supports the climate goals of every country around the world?

Why is data protection more important than privacy?

The word “privacy” makes people feel that sharing their data is inherently unsafe.

The fact is, by striving for data protection rather than privacy, we can enjoy the transparency, consent, ownership, and monetization rights outlined above, while agreeing to share our data safely and securely, and getting our slice of the pie.


Indeed, we’re starting to see this recognised in the marketplace. A growing number of data services, such as Datacoup and UpVoice, allow you to earn by sharing your data anonymously. By giving truly informed consent to share your personal data safely and securely, you can become a data input into the algorithms that produce trillions of dollars of value around the world, and be given credit for it.

Conclusion: it’s time to think about our data rights

Companies need to understand that increasing digital trust will encourage people to produce more data than ever before. Right now, though, the lack of informed consent means we’re living our digital lives without realizing just how much data we’re generating and sharing, or how it’s being used.

By describing how Cambridge Analytica was able to gain access to such a huge amount of our personal information and use it to undermine the democratic process, Brittany Kaiser highlighted the need for us to think more about our data rights: the rights to our personal information.

To paraphrase Professor Stephen Hawking, the point of all the technologies we build is to improve our lives. When creating those technologies, then, it’s vital that we build something that’s trustworthy, transparent, consensual, and that gives ownership back to individuals. Only then will we truly achieve digital trust.

This is why Didomi is a founding sponsor of Yes We Trust: to help people understand trust, inspire it, and thrive in a privacy-conscious world.

Thank you to Brittany Kaiser for speaking, and to all participants for taking part. Find replays and further information on the Yes We Trust website.
